on

What’s the deal with hash functions in Zero Knowledge?

When exposed to the ZK space, it usually takes little time to stumble upon some alien hash functions for the average crypto engineer - most likely Poseidon1. So, for the curious, in this blog post, we want to shed some light on the history of efficient hash functions in ZK and why we do not simply use our good and standardized friends SHA-2/3.

For an in-depth discussion we refer to the amazing Zero-Knowledge Podcast’s episode 250 with Dmitry Khovratovich, who coauthored many ZK friendly hash functions including Poseidon. The episode aired 2 years ago, and there has been some development since then, so you can still stick around and read the post!

Why do we want something like Poseidon?

Ok, coming back to our original question. Many ZK frameworks come with an implementation of the Poseidon hash function, but why is that? As it turns out, membership proofs and Merkle tree commitments are essential to many ZK use cases. In both cases, many data elements are accumulated using a Merkle tree, and paths from leaves to the root node are later proven using ZK-proof systems. And what is computed in every node of the Merkle tree? Exactly, a cryptographic hash function!

Let's assume we have a leaf in that Merkle tree and want to prove ownership with a SNARK (or STARK, whatever floats your boat). The resulting ZK circuit consists of multiple hash computations until we reach the tree's root. These trees quickly become rather significant in height, and hence, the performance of proof generation highly depends on the efficiency of the used hash function. In fact, this is why no one uses traditional and well-established hash functions, such as SHA-256, SHA-3, or Blake, for ZK use cases. But wait, aren’t those hash functions insanely fast on modern CPUs?

We need to begin a bit further back to answer this question. Most modern ZK-proof systems operate over larger prime fields (e.g., 256-bit in Halo22, 64-bit in Plonky23, and 31-bit in Plonky34), and their performance cost usually relates to multiplications in the circuit. For R1CS constraints, we usually just look at the number of multiplications (in the equivalent representation – more on that later), whereas in Plonkish systems prover cost scales (in a simplified way) with the trace width, trace depth, and the degree of the transition polynomial, which also depends on the multiplicative complexity of the circuit.

Traditional hash functions, however, are usually defined to operate on bits and not prime field elements. This has two significant implications. First, the hash computation's internal state must be at least 256 bits leading to either large trace width or depths in the Plonkish representation. Second, binary arithmetic needs to be simulated in the native prime fields of the ZK proof system, which requires proving each input element is a bit (requiring to proof $x(x-1)=0$ for each bit) and simulating AND/XOR which require a multiplication each ($x_1\ \mathsf{and}\ x_2=x_3 \Rightarrow x_1 \cdot x_2=x_3$, while $x_1\ \mathsf{xor}\ x_2=x_3 \Rightarrow x_1 + x_2 – 2\cdot x_1 \cdot x_2 = x_3$). This leads to a large number of multiplications and therefore large traces, resulting in highly inefficient prover performances.

Consequently, researchers developed new hash functions optimized to be efficient in ZK-proof systems. These hash functions are defined to directly operate over prime fields, counteracting the disadvantages outlined above. Furthermore, additional techniques are introduced to minimize the number of multiplications in the ZK circuit. As a result, modern ZK friendly hash functions can be built from less than 100 multiplications (see e.g., https://eprint.iacr.org/2022/403.pdf). This is already significantly fewer multiplications compared to just proving that inputs are bits for traditional hash functions, ignoring the actual hash computations.

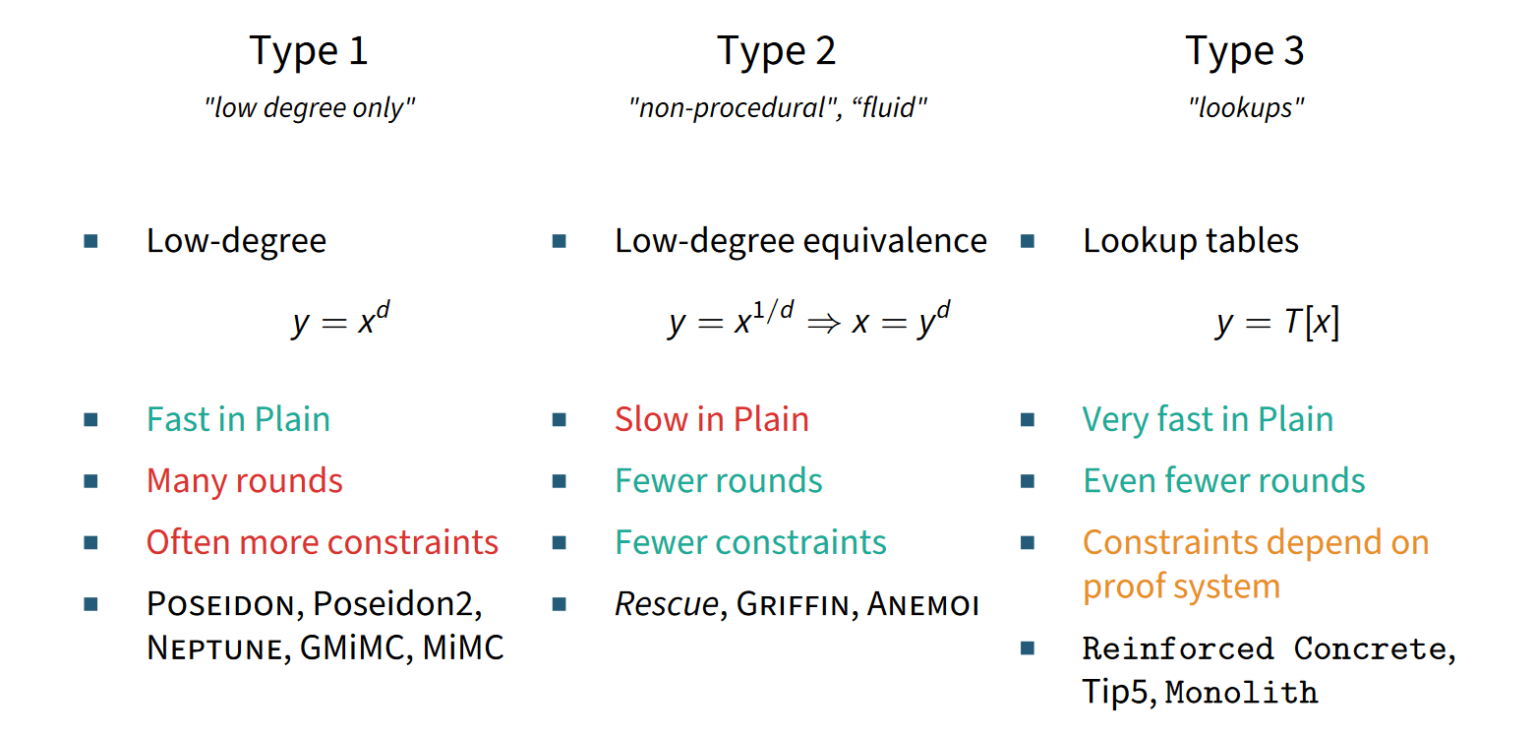

Broadly speaking, the new hash functions can be categorized into three different classes:

- Low-degree round functions

- "Low-degree equivalent" round functions

- Lookup-based round functions

Let's have a closer look at these...

1) Low-degree round functions

In a sense, this class of hash functions may be seen as the first generation of ZK hashes, starting with MiMC. Many hash functions, including hashes built from MiMC5 and GMiMC6, as well as our superstar Poseidon1, its successor Poseidon27, and Neptune8, exclusively rely on low-degree round functions, most commonly constructed from the power map $y = x^d$ (usually d is either 3, 5, or 7, depending on the prime field). While this approach leads to a relatively small number of multiplications per round, they also require many rounds to be secure. In total, this still allows for more performance than using standardized hash functions operating on bits like SHA-2, while being relatively straightforward to analyze in terms of cryptanalytic attacks.

2) Low-degree equivalence

A second class of hash functions relies on the following observation. In a ZK circuit, one does not have to directly model a system of constraints for the given circuit. It suffices to model a different constraint system, which is satisfied if and only if the original constraint system is satisfied. Ok, this can be hard to digest, but it gets clearer with an example...

An example: Assume the circuit computes $y = x^{1/d}$. Then a constraint can be built that directly proves $y = x^{1/d}$. Another approach is to prove $y^d = x$ which is an equivalent representation. If d is small and the prime field is large, then $1/d$ is a considerably large exponent requiring many multiplications to compute. However, $y^d = x$ is an equivalent constraint of low degree, suitable for efficient representation in ZK circuits.

Designs using the power map $y = x^{1/d}$ require fewer rounds for security and usually have a more efficient representation in proof systems than designs relying solely on low-degree round functions. This is big news; our objective is to improve the performance inside a ZK Circuit.

However, the improved speed comes with a price. When computing a hash outside the circuit (i.e., in plain), one must directly compute $y = x^{1/d}$, which is significantly slower than $y=x^d$. Thus, these designs usually have slower plain hashing performance compared to, e.g., Poseidon.

Hash functions following this strategy include Rescue9 (and Rescue-Prime), Anemoi10, and Griffin11. Furthermore, an alternative approach using the Legendre symbol, which, however, leads to more inefficient constructions, is Grendel12.

Let's summarize what we learned so far

Our old friends SHA-2/3, Blake, etc., are fast in plain but have high cost in a ZK Circuit due to its arithmetic nature. MiMC was the first construction utilizing low-degree round functions ZK friendly hashes, a research direction which resulted in Poseidon and a significant performance boost compared to traditional hash functions when used inside a ZK circuit. Researchers developed hash functions built on low-degree equivalence to further improve in-circuit performance, resulting in Rescue (and Rescue-Prime) and Griffin. While Griffin is cheaper than Poseidon inside a ZK Circuit, this comes at the cost of more expensive hashing in plain.

The million-dollar question is, can we get speed equivalent to SHA-3, or similar, in plain, but can still improve the cost inside a ZK circuit?

In fact, there is an answer to this question! And the answer is yes (with an asterisk).

3) May the speed of SHA-3 be with you

A third and very recent class of hash functions follows the trend of modern ZK-proof systems and uses lookup tables for cheaper computations. The main observation is the following. When operating over large prime fields, one can decompose field elements into smaller ones (e.g., of size 8-bit), perform a table lookup, and compose the result back to a field element. This approach has several advantages:

- The table lookup is fast in plain and comparably cheap in ZK circuits (when lookup arguments are supported by the proof system, this is the asterisk above).

- The resulting security (especially against algebraic attacks) is high; thus, only a small number of rounds is required. The downside is, of course, that the underlying proof system must support lookup arguments to enable these kind of hash functions.

The first design following this approach was Reinforced Concrete13, which is highly optimized for large prime fields of ~256 bits. It has significantly faster plain performance compared to other hash functions but is still considerably slower compared to, e.g., SHA-3. The follow-up design Tip514 builds on this concept and proposes a hash function for a special 64-bit prime field which is used in e.g., Plonky2. In contrast, Monolith15 generalizes the idea to further fields (e.g., the same 64-bit field and the 31-bit as used in Plonky3) and for the first time achieves a plain performance comparable to SHA-3.

As a short summary of this overview, have a look at the figure below.

We hope this post helped you gain an overview of hash functions that are used in the zero-knowledge space. If you are still interested and would like to go into more depth, expect a deep dive into a few select hash functions, their history, design and properties in future blog posts.

Disclaimer: The author of this blog post is a co-designer of Griffin11, Reinforced Concrete13, and Monolith15. Our company TACEO has close ties to the IAIK institute of Graz University of Technology which (including alumni) is involved in the design of 8 out of 12 hash functions named in the listing above and our cofounders were involved in designing 6 of these hash functions, including Poseidon1.

Monolith: https://eprint.iacr.org/2023/1025

Reinforced Concrete: https://eprint.iacr.org/2021/1038

Poseidon: https://eprint.iacr.org/2019/458

Poseidon2: https://eprint.iacr.org/2023/323

Rescue: https://eprint.iacr.org/2019/426

Griffin: https://eprint.iacr.org/2022/403

Anemoi: https://eprint.iacr.org/2022/840

Neptune: https://eprint.iacr.org/2021/1695

Grendel: https://eprint.iacr.org/2021/984

Plonky3: https://github.com/Plonky3/Plonky3